Artificial Intelligence (AI) technology is steadily gaining traction in the legal sector, and some practitioners worry about the changes it’s bringing. Many legal professionals fear AI will threaten their job security by significantly reducing the number of available jobs. Others raise ethical and legal concerns about the use of AI in their work. Still others wonder whether future attorneys will possess the skills needed to take advantage of this technology. This article discusses a few of the valid concerns regarding legal AI technology and explains why the benefits of AI outweigh the risks.

Will AI Make Lawyers Obsolete?

When technology performs better than humans at certain tasks, job losses seem inevitable. But the effect may not be as dire as some predict. Current AI technology isn’t the artificial superintelligence depicted in science fiction novels, which can easily master diverse topics. Yet many attorneys think of artificial superintelligence when they hear about the use of AI in the legal world. The bottom line? The dynamic role of an attorney, one that involves strategy, creativity, and persuasion, can’t be reduced to one or several AI programs.

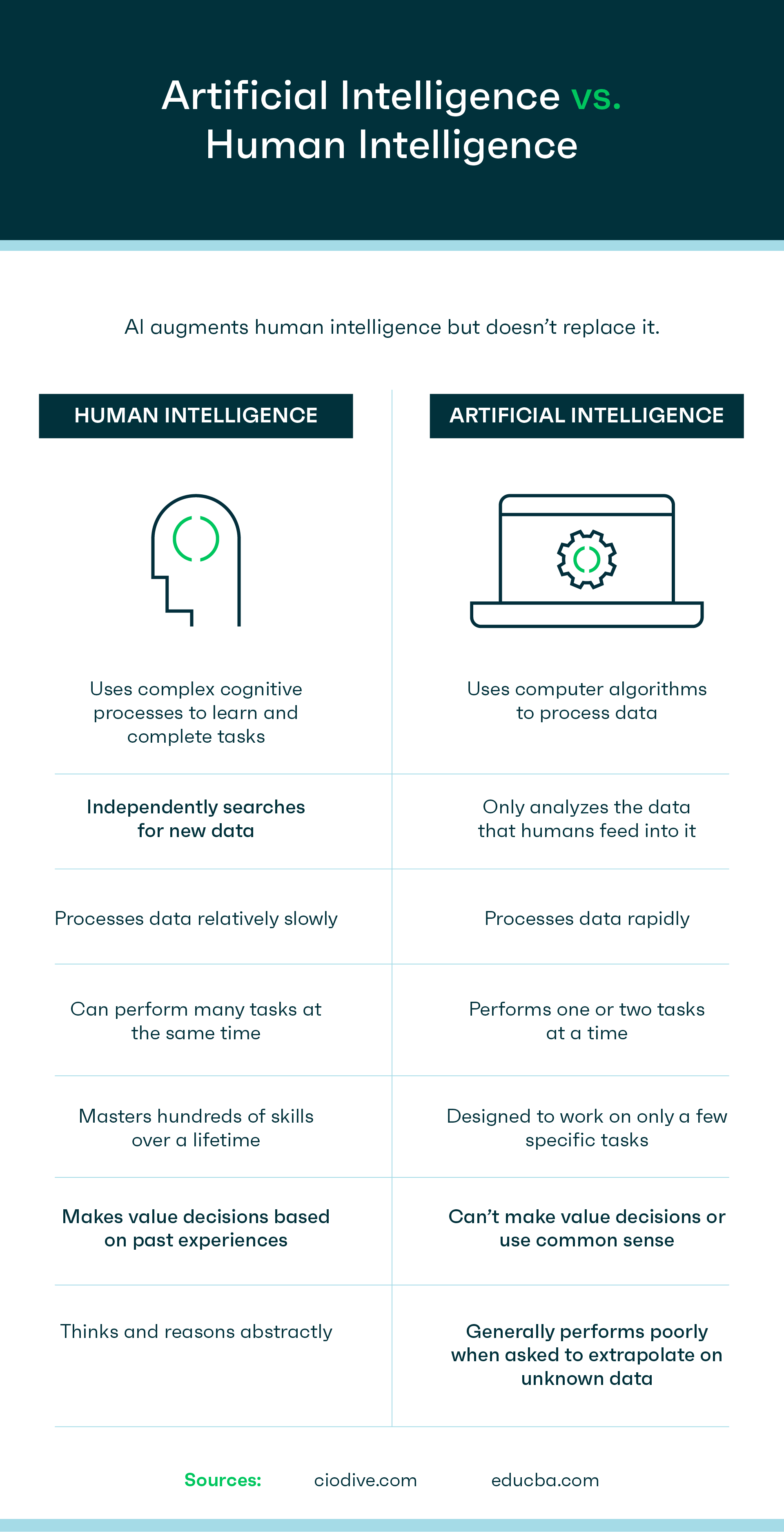

Many people believe AI technology works in the same way as a human brain, but that’s not the case. In his book Online Courts and the Future of Justice, Richard Susskind discusses the mistaken impression that machines mimic the way humans work, which he terms an AI fallacy. Many current AI systems work differently from humans. For example, they analyze data using machine learning algorithms rather than cognitive processes. AI is adept at processing data, but it can’t think abstractly or apply common sense as humans can. Thus, AI in the legal sector enhances the work of attorneys, but it can’t replace them.

Of course, as more firms and legal departments adopt AI technologies and as those technologies improve, some jobs in the legal sector may disappear, particularly roles involving routine tasks that can be automated, such as freelance document review. But historically, when technology has displaced jobs, it has created jobs in other areas. AI has already created new legal positions, including AI legal knowledge engineers. Humans will remain indispensable to the practice of law, despite rising dependence on AI.

However, AI technology will change the way we work. For instance, document review projects can involve document sets too large for a team of humans to manage, given the cost of attorney reviewers and the pressure of deadlines. According to some studies, AI outperforms humans in accuracy when selecting relevant sources in document review. Additionally, because so many contracts are lengthy, mistakes caused by human error are common. AI software decreases the time attorneys spend redlining contracts and increases the accuracy of the review.

The technology also frees up attorneys’ schedules by automating repetitious, monotonous tasks so attorneys can focus on devising better legal solutions for their clients as well as developing more strategic initiatives for their firms. With the application of AI software in contract management, attorneys can extract data and review contracts more rapidly. Doctoral candidate Beverly Rich, writing for Harvard Business Review, says her research suggests that firms with a significant volume of routine contracts “have generally seen an increase in productivity and efficiency in their contracting” when using AI software.

Ethical Concerns About AI in the Legal Sector

AI in the legal sector raises ethical concerns about competence, diligence, and oversight. The use of AI technology creates new situations that current ethics rules have yet to tackle. For instance, how do our duties of competence and diligence apply to the use of AI technology in the legal field?

Duty of competence

Attorneys are not computer scientists, and yet if technology impacts our duty to our clients, we have some obligation to understand why and how. The American Bar Association (ABA) recognizes this necessity. In 2012, the ABA’s House of Delegates amended Model Rule 1.1’s Comment 8 to include:

“Maintaining Competence: To maintain the requisite knowledge and skill, a lawyer should keep abreast of changes in the law and its practice, including the benefits and risks associated with relevant technology, engage in continuing study and education and comply with all continuing legal education requirements to which the lawyer is subject.”

To date, 38 states have adopted some version of this revised comment to Rule 1.1. In addition to a duty to be competent in the letter and practice of the law, attorneys in these states must also maintain competence in relevant technologies. Note that the comment requires knowledge not only of the risks but also of the benefits. In the future, all states may consider it unethical to avoid technologies that could benefit one’s clients.

Black box challenge

When an attorney submits a query to AI software, it goes into what has been termed a “black box,” wherein the software works its magic and provides feedback to the user. But it’s difficult for humans to understand what happens in the black box, that is, how the particular AI technology analyzes the queries and data to determine the output. Yavar Bathaee writes in the Harvard Journal of Law & Technology that our lack of understanding “may arise from the complexity of the algorithm’s structure, such as with a deep neural network” and “may arise because the AI is using a machine-learning algorithm that relies on geometric relationships that humans cannot visualize.”

This opacity complicates matters for attorneys. How deeply must an attorney understand the inner workings of that black box to ensure that she meets her ethical duties of competence and diligence? These questions will continue to play out as our reliance on technology increases and injustices arise.

The other issue with the AI black box is the lack of transparency from tech companies regarding the inner workings of their AI algorithms. For now, most law firms rely on third-party software providers rather than creating their own AI programs. But AI companies that create black-box AI technology often disclose little about how it operates. These companies may have legitimate concerns that competitors will copy their trade secrets or that hackers will attack their software. Even so, law firms must consider and mitigate these risk factors when they decide to purchase and rely on AI software.

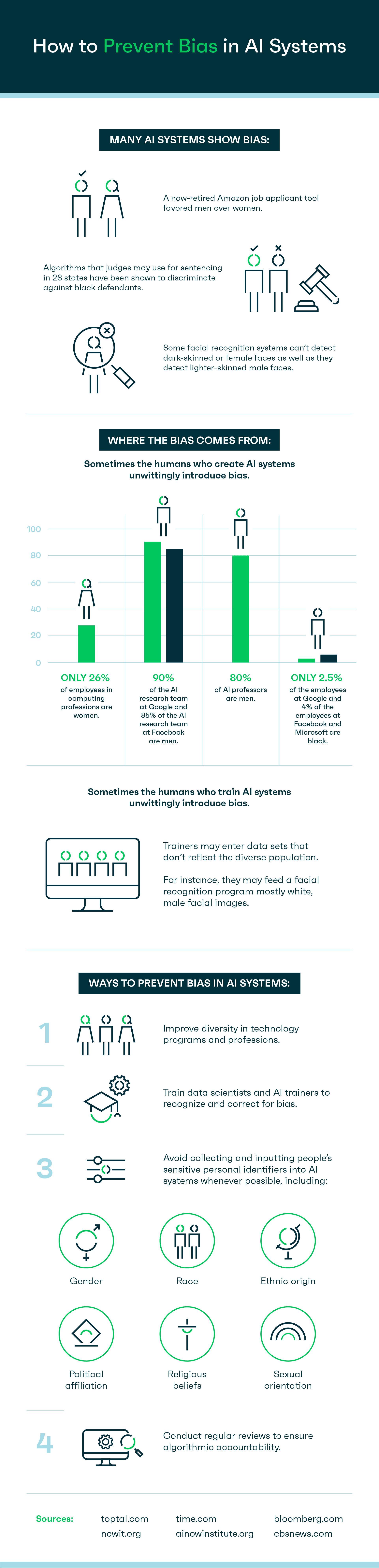

Potential of bias in AI

Humans oversee AI software, and we have inherent biases. Plus, despite their distinct methods of problem-solving, AI technologies are also subject to bias. If the data we provide to AI software is biased or if the system’s methods of analyzing data are flawed, then the output will be biased too.

As an example, AI technology has shown bias in recruitment. In 2018, Amazon dispensed with a job applicant review tool it created because it favored men over women. Researchers have discovered that some algorithms, which judges may use to determine sentencing, discriminate against black defendants.

According to a study by New York University’s AI Now Institute, the people who found and build new AI technologies are predominantly affluent white males. As a result, the machines “learn from and reinforce historical patterns of racial and gender discrimination.” In the NYU study, which took place over one year, researchers found that “within the spaces where AI is being created, and in the logic of how AI systems are designed, the cost of bias, harassment, and discrimination are borne by the same people: gender minorities, people of color, and other underrepresented groups. Similarly, the benefits of such systems, from profit to efficiency, accrue primarily to those already in positions of power, who again tend to be white, educated, and male.”

Because a large potential for injustice exists for underrepresented and less powerful groups, humans will need to conduct reviews to ensure algorithmic accountability. Tech-savvy attorneys may be the best people to fill this role. Attorneys may also need to advocate for changes in laws to protect consumers from the potential consequences of reliance on AI technology. (Once again, it’s clear that while AI may decrease opportunity in some areas of the law, it will increase opportunities in others.)
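To make the idea of an accountability review more concrete, here is a minimal sketch of one check a reviewer might run: comparing how often a tool produces a favorable outcome for each group. The function names and the sample data are hypothetical and are not drawn from any tool mentioned in this article. A low ratio between groups is a signal for closer human review, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable outcomes for each group.

    `decisions` is a list of (group, favorable) pairs, where `favorable`
    is True when the tool recommended the person.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in decisions:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Return the lowest group rate divided by the highest group rate."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any favorable outcome
    return min(rates.values()) / highest


# Hypothetical, made-up output of a resume screening tool.
sample = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)

rates = selection_rates(sample)
ratio = impact_ratio(rates)
print(rates, ratio)
```

In this made-up sample, the tool recommends men twice as often as women, so the ratio falls well below the four-fifths (0.8) rule of thumb that is sometimes used as a first screen for adverse impact in hiring. A reviewer would then need to examine the training data and the tool’s methods to find out why.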

Will AI Technology Outpace Law School Curriculums?

Law schools must prepare students to practice law in the future, yet how well are they preparing future lawyers to integrate new AI technologies into their practice? Speaking with Forbes, Song Richardson, Dean of the University of California-Irvine School of Law, says,

“What worries me is that we won’t have lawyers who understand algorithms and AI well enough to even know what questions to ask, nor judges who feel comfortable enough with these new technologies to rule on cases involving them.” In light of such valid concerns, it is becoming increasingly clear that our law schools must prepare tomorrow’s lawyers to use the new technology.

Not all law schools are evolving quickly, but a number of them are developing courses in artificial intelligence and machine learning. Some law schools, such as Georgia State University College of Law, are experimenting with ways to teach students how to work with AI software. The school’s Legal Analytics and Innovation Initiative allows law students to collaborate with computer science and business students. Together, they develop technologies to address legal problems that, until now, have been unsolvable. For example, they’re building a predictive model for civil employment cases.

And the Legal Innovation and Technology Lab at Suffolk Law School is working on a project to improve access to the civil legal system by creating a machine-based algorithm to translate queries posed by laypersons into legal queries. For instance, if someone queries, “What can I do if my landlord refuses to clean my moldy apartment?” the program would deliver search results showing relevant legal issues, such as “constructive eviction.” By increasing collaboration between computer science and law students and increasing innovation, law schools can help decrease the justice gap (the number of people who don’t have access to the legal system).

Conclusion

Change in the law and its practice can feel uncomfortable and provoke anxiety. But in this instance, technology has the potential to give the legal profession a much-needed boost and to increase the opportunity for justice for many people. AI provides firms with the means to remain competitive in the legal sector. It also helps clients without abundant financial resources resolve legal issues for less money. Human lawyers will still argue cases, make decisions, and write legal briefs. AI augments the skills of attorneys rather than replacing them.

Most attorneys went into the profession to make a difference—to work on engaging issues, to discover ways to diminish the justice gap, or to draft convincing arguments to hold government officials accountable. Whatever your passion or responsibility, AI-powered legal technology can help free you from mundane work and give you time for the work that excites you about being an attorney.